Published yesterday, the European Democracy Action Plan (“EDAP”) is finally here. What does the plan promise, and can it significantly address the problem of disinformation? And what do we hope for from the Digital Services Act (“DSA”), coming next week?

Recent research by Avaaz on disinformation related to the 2020 US elections is clear: disinformation is still widespread and has the potential to change public opinion. The framework composed of the EDAP and the DSA could be groundbreaking in finally holding platforms accountable for the harm created by the disinformation they amplify, with clear goals and independent oversight.

But that will only happen if the EU upholds its promise to defend the democratic interests of its citizens over the interests of Big Tech. The next few months will be key for working out the details of implementation, and we believe that an effective framework has to be based on two central propositions: Transparency for All (for users and regulators alike) and Accountability for the Algorithm, meaning standardised metrics and annual goals, monitored and enforced by a regulatory authority.

For the last three years, Avaaz has been one of the leading organizations, in the EU and around the world, reporting on the scale of disinformation and assessing the effectiveness of the technical solutions the platforms have attempted. During this time, we have seen a change in the platforms' public statements: they have started to acknowledge their vulnerability to abuse by those spreading disinformation. However, we have not seen a corresponding drive from them to ensure that the measures they use to control it actually succeed, and the non-transparent policies they apply show a concerning lack of consistency.[1] We saw this writ large in our recent investigations during the US election.

A recent POLITICO poll found that 70% of Republicans believe that the election, which saw their candidate voted out of office, was not free or fair, despite the lack of evidence of any fraud.[2] Why? A poll commissioned by Avaaz one week before the election might explain the phenomenon, at least in part: US voters' social media feeds were full of stories designed to cause distrust in the system. Our poll of US registered voters (Republicans and Democrats) in the lead-up to the election showed that 85% of them had seen the misinformation claim that “Mail-in voting will lead to an increase in voter fraud.” 76% had seen it on the internet, and 68% of those had seen it on Facebook. 41% of those who had seen it believed it to be true.

If that is no surprise, how easy is it to spread misinformation like this on social media?

According to Avaaz's findings, quite easy. Simple tweaks to a piece of misinformation that has already been fact-checked by Facebook's own fact-checking partners can fool the moderation system, so that the label meant to tell readers to check the facts is never applied.

Compare two versions of the same post:

While the example on the left was labelled and directed users to a fact-check, the one on the right (identical, but with more engagement) had no label at all. All it takes, it seems, is a colour change. Now, if Facebook can use image detection in its copyright protection measures, why can't it do the same to identify content it already knows is false?

In fact, of the fact-checked misinformation we analysed, about 40% circumvented Facebook's own policies and remained on the platform without a label.

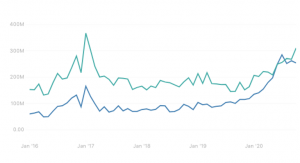

Algorithmic design also plays an important role. It is clear that without active demotion by the platforms, the algorithm amplifies the reach of misinformation beyond a marketer's dreams. In the months before the US election, Avaaz identified 100 large pages and groups that had shared misinformation at least three times in the preceding year, "misinformation" meaning content fact-checked and confirmed as false by Facebook's own fact-checking partners.

The graph below shows the upward curve of their reach. As their divisive content boosted interactions, their reach skyrocketed from the start of the pre-election period in early 2020: their monthly interactions tripled, catching up with the top 100 US media pages on Facebook in the month before the election. In July and August 2020, during the anti-racism protests, the large pages that had shared misinformation three or more times surpassed the top US media outlets in engagement.

January 2016 to October 2020: monthly interactions of 100 repeat misinformers compared with US media. The blue line represents pages that had shared misinformation; the green line represents the top 100 media pages.

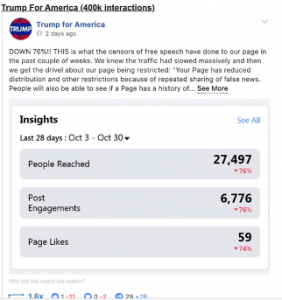

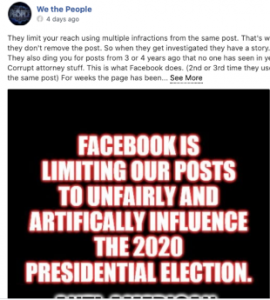

Our team shared these findings with Facebook in mid-October, along with lists of pages from previous investigations. After October 20, the graph shows a slight dip in reach, and some of the pages we had flagged to the platform began to complain about significant reductions in their reach, which could be the result of Facebook taking action to demote them.

The data shows that platforms can reduce the reach of disinformation once they are on notice of it, but they do so without any transparency about the action they take: not to us, and not, as the complaints of some of the pages we flagged make evident, to the content creators themselves, as the three examples below show.

This lack of transparency lies at the heart of our concerns over free speech. No matter how noble the platforms' intent, their actions represent a wholesale privatisation of the regulation of speech in this crucial public sphere. Such non-transparent actions are not effective against disinformation, and they represent a far greater threat to freedom of speech than open, fair and accountable regulation ever could.

Which brings us back to EDAP – and what it can do to protect democracy all over the Union.

In our discussions with the Commission over the past few months, we put forward two central propositions for combating the amplification of verifiably false and misleading content to a scale where it has the potential to cause public harm: Transparency for All and Accountability for the Algorithm.

The EDAP proposal presented by the Commission suggests that these two concepts could become fundamental pillars of the EU's action against disinformation, but that will depend on the details of implementation over the next few months. Transparency and accountability: but of what, and to whom?

Transparency for All

Transparency with users who have seen disinformation

Platforms should be transparent with all users about the fact-checked disinformation they are aware of, using retroactive notifications to inform every user who has been exposed to it. The EDAP states that it will work to ensure “adequate visibility of reliable information of public interest”, and we hope this will be translated into consistent labelling measures.

Fact-checking can be a game-changer in tackling disinformation, but only if it is transparently communicated to users. Avaaz investigations revealed delays in labelling and a huge imbalance in moderation between English and other languages. As a result, millions of users who see disinformation will never know it was false, even when the platform itself is aware. Research[3] demonstrates that corrections reduce belief in disinformation by almost 50 percent on average; making them fully transparent to users is imperative.

Users should be made aware of the decisions platforms take, and why

For example, if an actor they have engaged with has been demoted or removed for violating the platform's terms of use, both users and content creators should know why. Fake accounts should be removed, and automated accounts, such as bots, must be clearly labelled. We wholeheartedly support EDAP's promise, laid out in Section 1 of the plan, to provide full transparency about the sources of advertising content, and we welcome the recognition that boosted organic content is a big part of that. This promise must be followed through in the Code of Practice to provide similar transparency about the sources of disinformation.

Open the Algorithm Black Box

Regulation will not succeed if it is conceived of as a “whack-a-mole” activity focussed on takedowns of content. The black box that holds the design of the algorithms running the platforms' recommender and moderation systems must be opened to inspection so that their societal impacts can be assessed. Platforms should also give regulators, accredited researchers and institutions sufficient access to evaluate them independently.

There is not much detail in EDAP on algorithmic transparency, so we must await the DSA. We hope to see users also given the right to be informed about what kind of data mining is used to select the content they see, and the option to turn off algorithmic selection or to choose which elements of it to enable, just as GDPR lets them tailor the data collection they allow, similar to the cookies opt-out.

Accountability for the Algorithm

Measurable targets

Transparency will have little effect without accountability, and the creation of measurable targets rightly lies at the heart of a successful EDAP.

Platforms must become accountable for their algorithmic amplification of disinformation and other harmful content, and of the sources of such disinformation. Under the supervision of a regulator, and with participation by the platforms, standardised goals should be set, with clear actions and metrics to assess whether they are achieved. Once established, failure to meet them should have regulatory consequences.

The industry also needs to be held responsible for mitigating the societal harms it creates: assessing its vulnerabilities on a regular basis and allowing independent auditors, under regulatory supervision, to propose the mitigation actions the platforms will need to take.

Stakeholder participation

Industry stakeholders, as well as fact-checkers, researchers, civil society organizations and academic institutions, should be involved in defining the metrics and the disinformation reduction targets, as part of an ongoing process and not just during limited “public consultations”. The participation of EDMO (the European Digital Media Observatory), as proposed by EDAP, could coordinate this input, but should not supplant it.

In summary: what can EDAP achieve against disinformation?

Overall, the combination of the EDAP and the DSA is exactly the right framework, and it could be groundbreaking. It can hold platforms accountable for acting on disinformation and protect freedom of speech by ensuring a) transparency to regulators and users and b) accountability for the algorithm. This is a huge step forward compared with both the stalled duty-of-care approach in the UK and the repressive models, based on penalising content creators, that we have seen in recent years in some countries (Hungary, Turkey, Russia). If it is backed by a strong backstop regulator with effective enforcement reach over these global corporations, it could reverse the amplification of disinformation that has disrupted elections and nearly overwhelmed our response to COVID.

But that's a big “if”, and the DSA has much to do in clarifying how the regulation outlined in EDAP will bite. Europe's Democracy Action Plan could be the first step towards a Paris Agreement for Disinformation that holds platforms accountable, or it could remain an ineffective statement of intent, with platforms reporting on the metrics of their choosing and therefore always succeeding. It is up to the Union, and Commissioner Jourová, to decide which path to take in the next few months. And Jourová has the backing of European citizens: 85% across key countries want social media regulation.[4]

[1] https://www.theguardian.com/technology/2020/oct/30/facebook-leak-reveals-policies-restricting-new-york-post-biden-story

[2] https://www.politico.com/news/2020/11/09/republicans-free-fair-elections-435488

[3] https://secure.avaaz.org/campaign/en/correct_the_record_study/